Lab for System Informatics and Data Analytics (SIDA)

Research

1. Measurement and monitoring strategy:

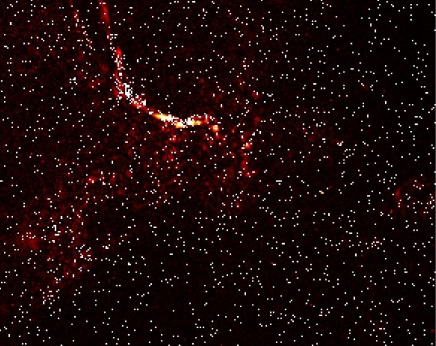

Measurement and monitoring strategies have long been important and challenging topics in quality engineering. Because resources are limited, practitioners often face the challenge of determining which variables to measure and how to leverage these measurements for better real-time quality control. My research group has been investigating systematic fixed and adaptive sensor system design and advanced process monitoring strategies for complex systems. Specifically, our recent focus is on monitoring high-dimensional streaming data by developing novel adaptive sampling, statistical process control, and fault diagnosis algorithms. Our goal is to address the challenges that arise with such big data, including heterogeneous data types with different sampling rates and data distributions, high computational cost, complex fault patterns, low signal-to-noise ratios, and resource constraints. The developed methodologies can be applied to many processes characterized by massive, high-dimensional data, such as anomaly detection, unmanned vehicle surveillance, climate change monitoring, cybersecurity, and network traffic flow analysis. Compared with other approaches, these methodologies offer several advantages, including significantly lower computational cost and substantial savings in physical sensors, data acquisition, and data transmission and processing time.
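To make the idea of monitoring with limited sensing concrete, the following is a minimal sketch, not the group's published algorithm. It assumes in-control data with mean 0, an upward mean shift as the fault, and a simple one-sided CUSUM statistic per variable. The function name `adaptive_cusum_monitor` and the parameters `k`, `delta`, `top_r`, and `threshold` are illustrative assumptions. At each step only `n_obs` variables are read, unobserved variables receive a small compensation `delta` so they are eventually revisited, and the sensors are moved to the variables with the largest statistics.

```python
def adaptive_cusum_monitor(stream, n_obs, k=0.5, delta=0.05, top_r=1, threshold=10.0):
    """Monitor a p-dimensional stream while reading only n_obs variables per step.

    Returns (alarm_time, suspected_variables), or (None, []) if no alarm is raised.
    All parameter names and defaults here are illustrative assumptions.
    """
    stats = None
    observed = []
    for t, x in enumerate(stream):
        if stats is None:
            stats = [0.0] * len(x)
            observed = list(range(n_obs))  # arbitrary initial sensor layout
        watched = set(observed)
        for j in range(len(stats)):
            if j in watched:
                # One-sided CUSUM update using the measured value x[j].
                stats[j] = max(0.0, stats[j] + x[j] - k)
            else:
                # x[j] is never read; a small drift makes sure the variable is revisited.
                stats[j] += delta
        ranked = sorted(range(len(stats)), key=lambda j: stats[j], reverse=True)
        if sum(stats[j] for j in ranked[:top_r]) > threshold:
            return t, ranked[:top_r]
        observed = ranked[:n_obs]  # move sensors to the most suspicious variables
    return None, []


# Noise-free toy stream: variable 4 shifts from 0 to 3 at time 10.
toy_stream = [[3.0 if (j == 4 and t >= 10) else 0.0 for j in range(6)] for t in range(30)]
alarm_time, suspects = adaptive_cusum_monitor(toy_stream, n_obs=2)
print(alarm_time, suspects)  # 14 [4]
```

In this toy run only two of six variables are read at each step, yet the shifted variable is located a few steps after the change. With noisy data the threshold would need to be calibrated, for example by simulation, to reach a target false-alarm rate.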

- Examples in natural science applications:

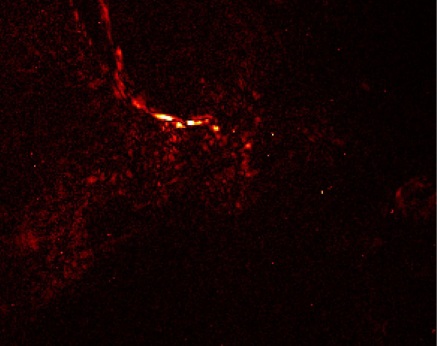

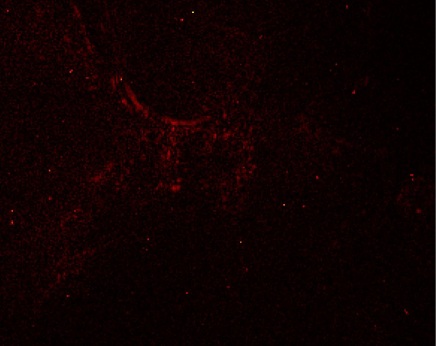

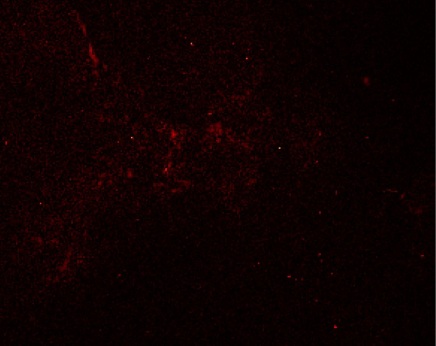

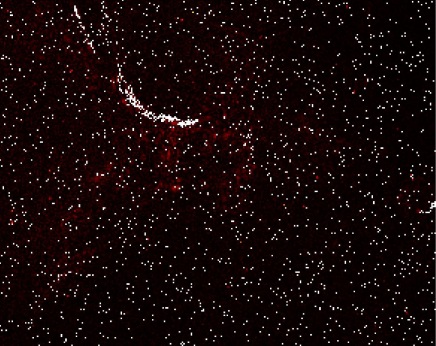

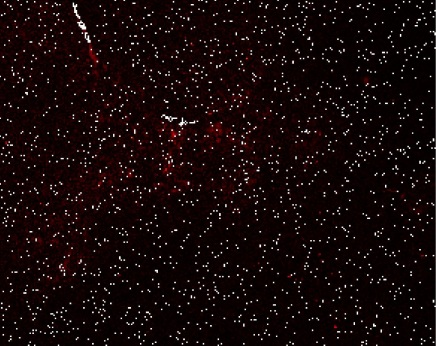

Figure 1. Real-time detection of a solar flare based on a streaming video. Snapshots: (a) when the solar flare is brightest; (b) when the solar flare is nearly over; and (c) when the video is over. (d)-(f) are the corresponding measurement strategies using the developed adaptive sampling algorithm (i.e., only the white dots, which account for 3% of the full information, are observed in each image).

- Examples in manufacturing applications:

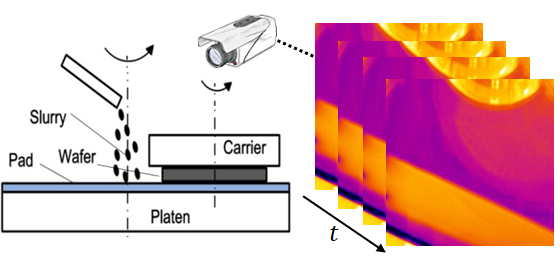

Figure 2. Real-time change detection based on wafer thermal profile in the CMP process.

- Examples in climate research:

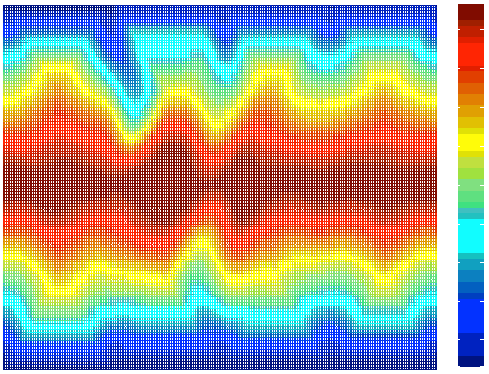

Figure 3. Real-time monitoring and saving strategy for large-scale climate simulations.

2. System degradation modeling, prognostics and decision making:

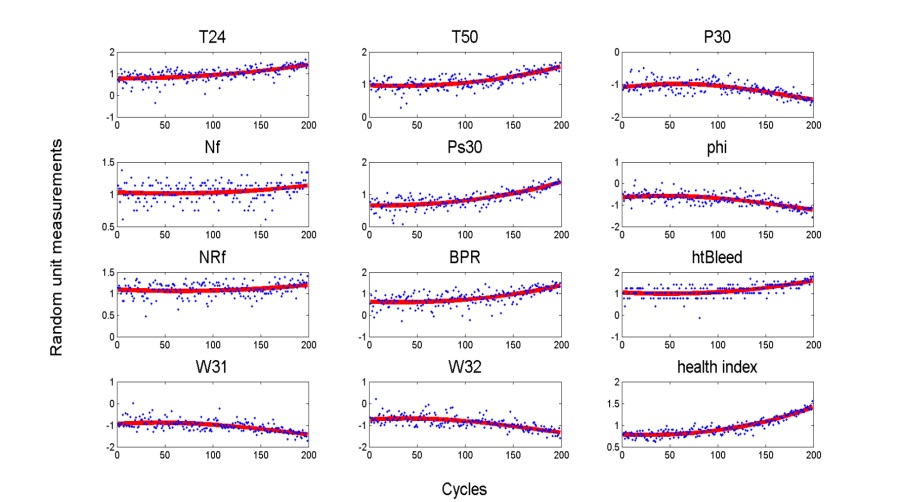

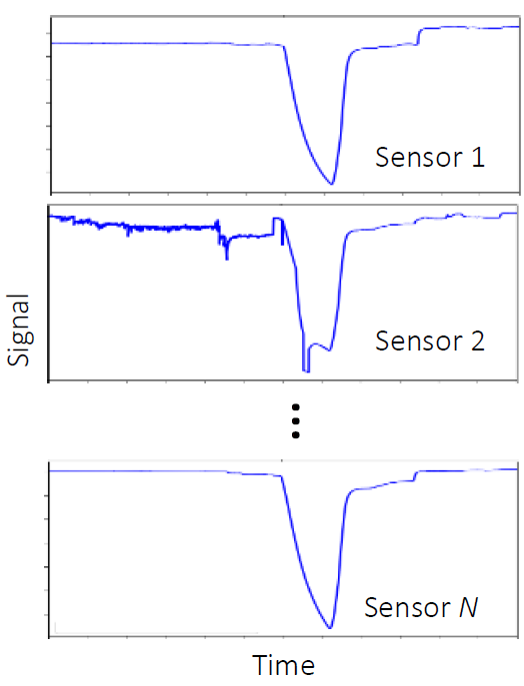

This research is motivated by the fact that a complex system is typically monitored by multiple sensors. For a given failure, different sensors have different data types, acquisition rates, sensitivities, correlations, and signal-to-noise ratios. For example, hundreds or even thousands of sensors are now deployed in an aircraft engine to monitor its performance simultaneously in real time. Methodologies are therefore needed to leverage such big data to better assess the current system status and predict its future behavior in real time. To address this challenge, my research group has been developing novel data fusion methodologies that select the best sensors and combine their information for more effective system degradation modeling, prognostic analysis, and maintenance decisions. In particular, our recent focus has been on investigating advanced machine learning methods (e.g., deep learning and transfer learning) for smart and connected systems, with the aim of enhancing model interpretability, prognostics, and decision making. This research topic has a wide range of applications, such as complex manufacturing and production systems, as well as degenerative human diseases that require accurate forecasting of future trends.
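As a rough illustration of selecting sensors and combining their information, the sketch below builds a composite health index from several degradation signals. It is a simplified stand-in, not the group's data fusion model: sensors are scored by how monotonic their trend is, the top `n_select` are kept, and their standardized signals are averaged with weights proportional to the scores. The names `trend_score` and `fuse_sensors` and the toy sensor names are assumptions made for this example.

```python
from statistics import mean, pstdev


def trend_score(signal):
    """Monotonicity in [0, 1]: |#increases - #decreases| / (n - 1)."""
    diffs = [b - a for a, b in zip(signal, signal[1:])]
    ups = sum(d > 0 for d in diffs)
    downs = sum(d < 0 for d in diffs)
    return abs(ups - downs) / len(diffs)


def fuse_sensors(signals, n_select):
    """Select the n_select most trending sensors and fuse them into one index."""
    scores = {name: trend_score(s) for name, s in signals.items()}
    chosen = sorted(scores, key=scores.get, reverse=True)[:n_select]
    total = sum(scores[name] for name in chosen)
    if total == 0:
        raise ValueError("no selected sensor shows a trend")
    length = len(next(iter(signals.values())))
    index = [0.0] * length
    for name in chosen:
        if scores[name] == 0:
            continue  # a trendless sensor carries zero weight
        s = signals[name]
        mu, sd = mean(s), pstdev(s)
        # Flip decreasing sensors so a larger index always means more degradation.
        sign = 1.0 if s[-1] >= s[0] else -1.0
        weight = scores[name] / total
        for t, value in enumerate(s):
            index[t] += weight * sign * (value - mu) / sd
    return chosen, index


# Hypothetical toy data: two informative sensors and one uninformative sensor.
toy_signals = {
    "temp": [1, 2, 3, 4, 5],
    "pressure": [5, 4, 3, 2, 1],
    "noise": [1, 3, 1, 3, 1],
}
chosen, health_index = fuse_sensors(toy_signals, n_select=2)
print(chosen)  # ['temp', 'pressure']
```

Here the uninformative sensor is dropped and the fused index increases steadily over time, which is the property a prognostic model would then extrapolate toward a failure threshold.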

- Examples in manufacturing applications:

Figure 4. (a) Illustration of an aircraft engine; and (b) demonstration of the data fusion method for better characterizing the condition of the degraded engine.

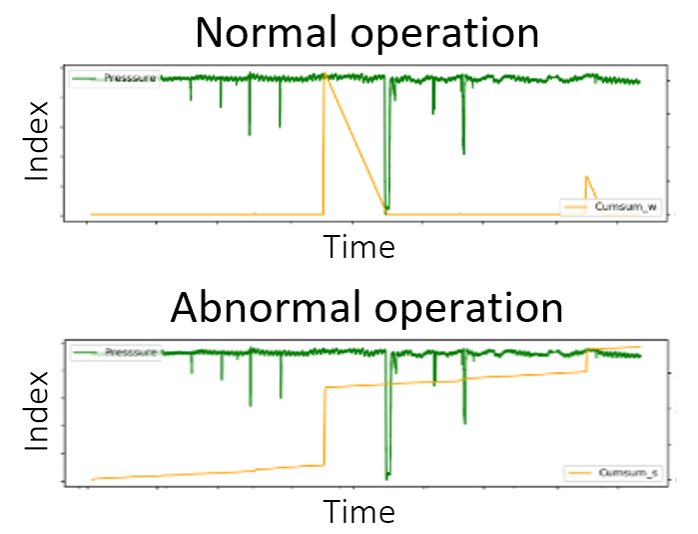

- Examples in nuclear applications:

Figure 5. (a) Illustration of multisensor signals; and (b) demonstration of the developed index for accurately distinguishing normal from abnormal operations under dynamic pressure changes.

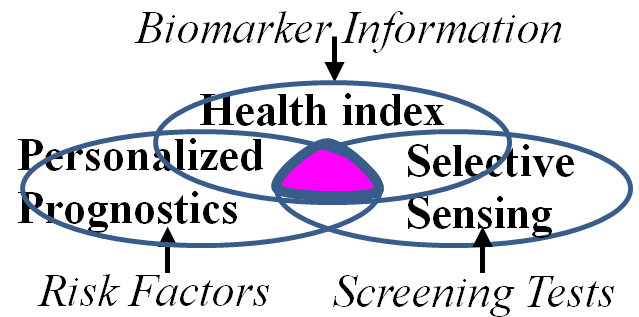

- Examples in healthcare applications:

Figure 6. (a) Illustration of the normal aging process; and (b) smart monitoring method for Alzheimer’s disease based on the developed data fusion prognostic model.

3. Other research topics in SIDA

(a) Edge computing for decentralized systems: Develop novel and effective AI algorithms and data transmission policies that support decentralized anomaly detection and prognostics in Internet of Things (IoT) systems by leveraging the power of edge computing.

Figure 7. Illustration of the developed edge computing lab testbed.

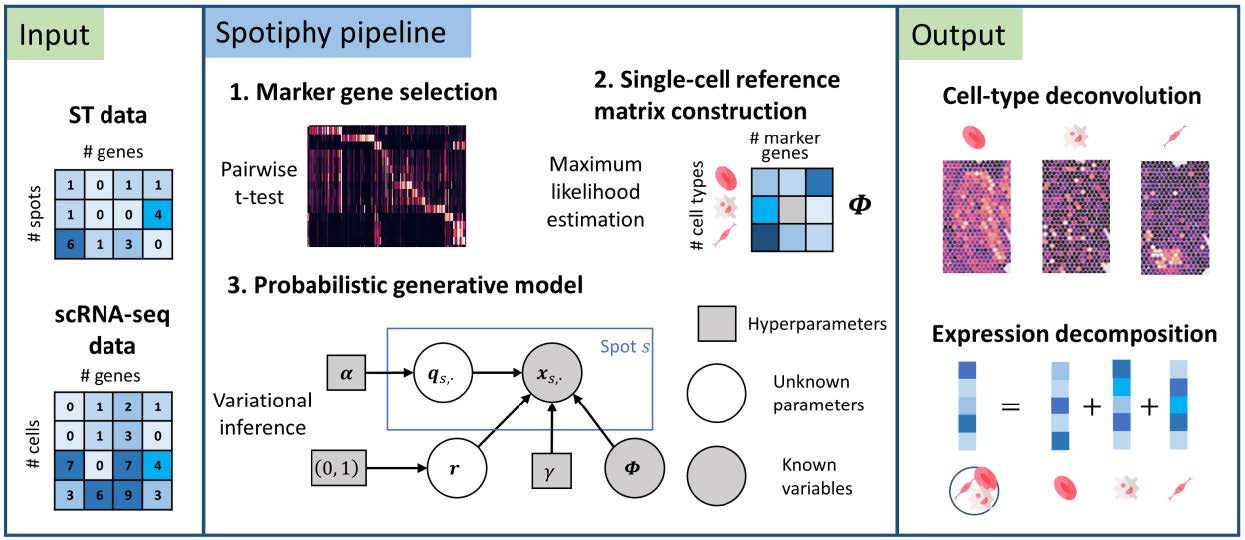

(b) Bioinformatics: Develop novel algorithms to analyze imaging-based spatial omics data and integrate single-cell and spatial omics data for cell-cell communication network inference.

Figure 8. Illustration of the proposed algorithm for revealing location-specific cell subtypes via transcriptome profiling at the single-cell level.

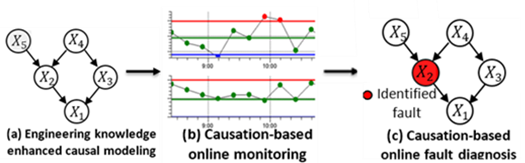

(c) Causation-based sensing, monitoring and diagnosis: Integrate causal relationships and multivariate statistical analysis to design cost-effective sensor allocation, monitoring, and diagnostic schemes in complex manufacturing systems, such as the hot forming, cap alignment, and rolling processes.

Figure 9. Illustration of causation-based sensing, monitoring and diagnosis.

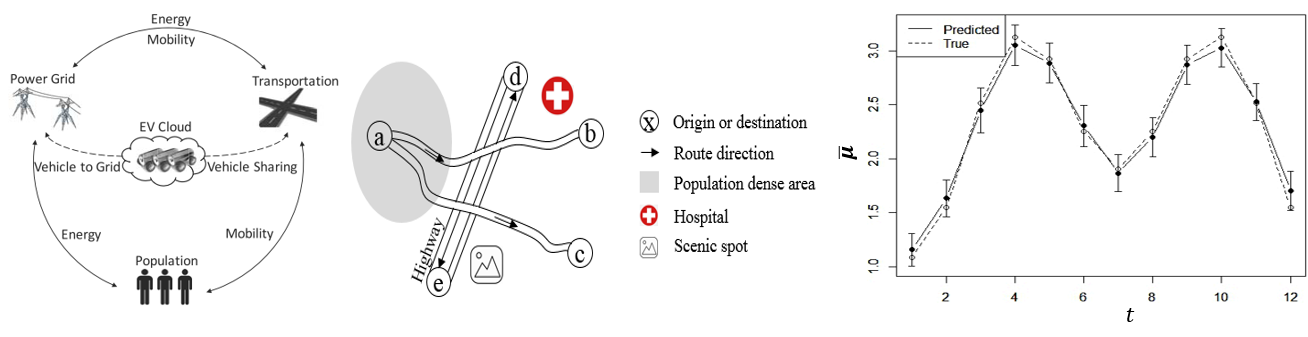

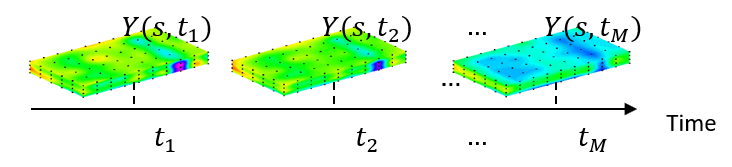

(d) Spatiotemporal field modeling and prediction: Integrate domain knowledge, physics-based models, and data-driven spatiotemporal stochastic models to better characterize spatiotemporal correlations and predict missing values in complex systems, e.g., traffic demand networks and grain thermal fields.

Figure 10. Illustration of real-time travel demand modeling and prediction in smart and connected cities.

Figure 11. Illustration of dynamic thermal fields modeling and prediction.

The SIDA Lab gratefully acknowledges funding support from the National Science Foundation (CMMI-1362529; CMMI-1435809; CNS-1637772), the Office of Naval Research (N00014-17-1-2261; N00014-23-1-2495), the Air Force Office of Scientific Research (FA9550-18-1-0145; FA9550-20-1-0072), the U.S. Army Engineer Research and Development Center (W912HZ20C0031), the Department of Energy (DE-NE0008805; DE-NE0008993; DE-NE0009404; DE-NE0009443), the National Institutes of Health, Toyota Material Handling North America, and 3M.